Enterprise AI

Accelerate Machine Learning, Deep Learning and HPC Workloads

Quality Workmanship

Expert Tech Support

World-Class Service

Outstanding Value

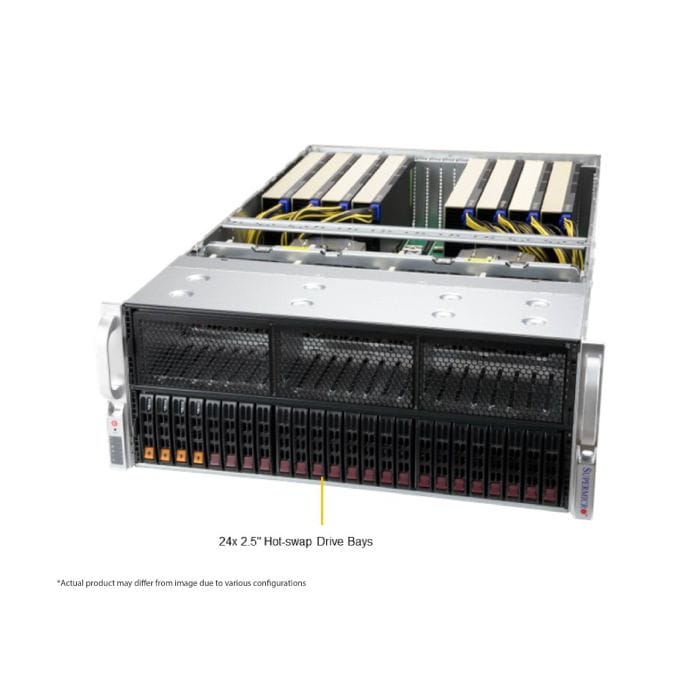

Choosing the right Enterprise AI server is critical for organizations looking to accelerate machine learning (ML), deep learning (DL), and high-performance computing (HPC) workloads. The first consideration is GPU and processing power, because AI-driven applications depend on high-performance GPU accelerators. These GPUs should be paired with high-core-count CPUs so that data processing and model training run without stalls. Scalability and expandability are also essential: a modular server architecture with support for multiple GPUs, NVMe storage, and accelerators with high-bandwidth memory (HBM) lets AI infrastructure grow with increasing computational demands.

Beyond raw compute power, storage and networking play a crucial role in AI performance. Enterprise AI workloads generate massive datasets, which call for high-speed NVMe SSDs and all-flash storage arrays to minimize latency and maximize throughput. Memory bandwidth and capacity must also be optimized: DDR5 system memory and HBM-equipped accelerators (HBM2e or newer generations) provide the bandwidth that AI training and inference demand. Low-latency networking is equally important for reducing communication bottlenecks in distributed AI environments. By considering these factors, enterprises can build scalable, high-performance AI server infrastructure that delivers faster insights, improved efficiency, and long-term ROI.